The Challenge of Emotional Expression in Generative AI Portraits

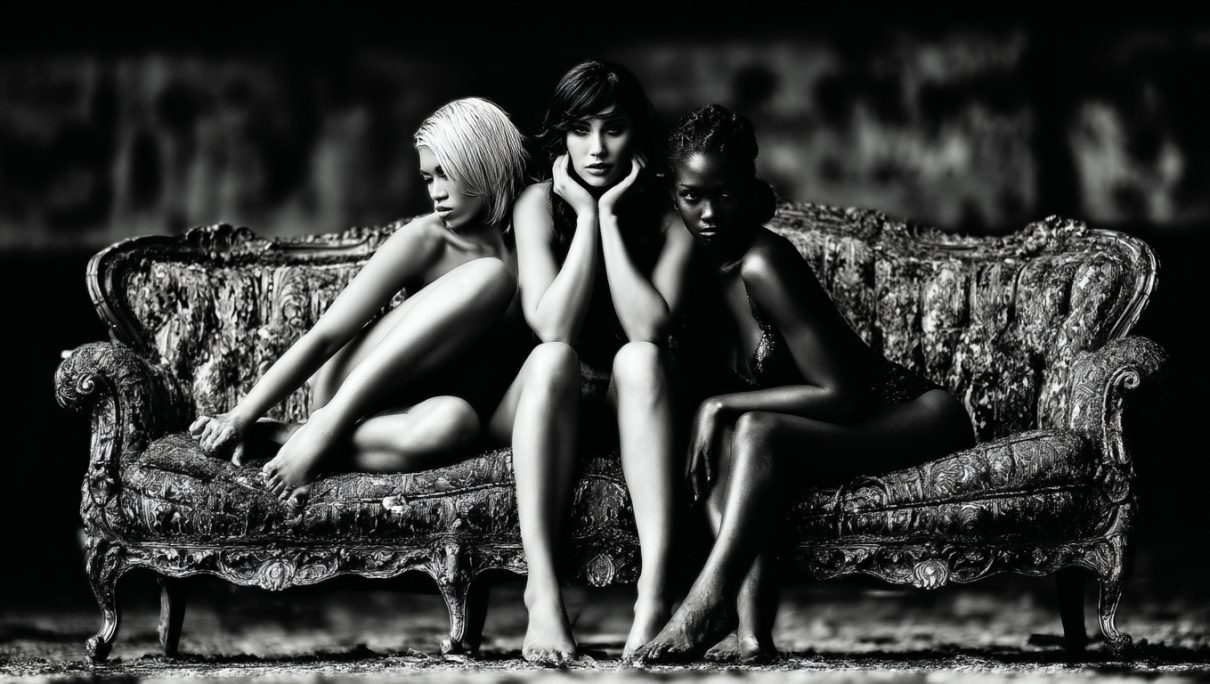

Generative AI has made remarkable strides in creating realistic and artistic images, but many engines still struggle to convey genuine emotional expression. Even with highly descriptive prompts, such as "a woman with tearful eyes, her lips trembling in sorrow as she clutches a faded photograph," the resulting image often falls short of the intended depth of feeling.

"ChatGPT is doing photo real images" - "… Hold my coffee!"

Websterix

Why AI Lags in Emotional Expression

- Limited Understanding of Nuanced Emotions

  While AI models like DALL·E, Midjourney, and Stable Diffusion excel at generating visually coherent images, they lack true comprehension of human emotions. They rely on patterns in training data rather than lived experience, which makes it difficult to translate abstract emotional cues into convincing facial expressions or body language.

- Over-Reliance on Stereotypes

  Many AI systems default to exaggerated or clichéd expressions (e.g., over-the-top smiles or cartoonish sadness) because they learn from widely available but often unrealistic depictions in stock photos and artwork. Subtle emotions, such as bittersweet nostalgia or quiet despair, are harder to replicate.

- Inconsistency in Fine Details

  Emotional authenticity depends on tiny details: the slight tension in a jawline, the way light catches a tear, or the posture of a subject. AI often misaligns these elements, leading to expressions that feel "off" or artificial.

- Text-to-Image Translation Gaps

  Even with precise prompts, the AI may prioritize visual accuracy over emotional resonance. For example, it might correctly render "tearful eyes" but fail to harmonize that detail with the rest of the face, resulting in an uncanny or emotionless gaze.

The Path Forward

Improving emotional expression in AI-generated portraits will require:

- Better training data with more diverse, nuanced emotional references.
- Advanced emotion modeling, possibly integrating psychology-based frameworks.
- User feedback loops where artists refine outputs by highlighting successful emotional cues.
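To make the feedback-loop idea a little more concrete, here is a minimal prompt-side sketch in Python. It is not how any image model is actually trained, and it calls no real image-generation API. The `Feedback` structure, the function names, and the 0.5 threshold are all hypothetical, chosen only for illustration. The sketch tallies which emotional cue phrases artists marked as convincing across several generations, then keeps the better-performing cues for the next prompt.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Feedback:
    """One artist review of one generated portrait (hypothetical structure)."""
    prompt_cues: list[str]  # emotional cue phrases used in the prompt
    successful_cues: list[str] = field(default_factory=list)  # cues the artist marked as convincing


def cue_success_rates(reviews: list[Feedback]) -> dict[str, float]:
    """Return, for each cue, the fraction of its uses that artists marked successful."""
    used = Counter()
    worked = Counter()
    for review in reviews:
        cues = set(review.prompt_cues)
        used.update(cues)
        # Only count marks for cues that were actually part of the prompt.
        worked.update(set(review.successful_cues) & cues)
    return {cue: worked[cue] / used[cue] for cue in used}


def refine_prompt(base: str, rates: dict[str, float], threshold: float = 0.5) -> str:
    """Append cues whose success rate meets the (assumed) threshold, best first."""
    keep = sorted(
        (cue for cue, rate in rates.items() if rate >= threshold),
        key=lambda cue: (-rates[cue], cue),
    )
    return ", ".join([base, *keep])


# Illustrative, made-up reviews; not real data.
reviews = [
    Feedback(["tearful eyes", "trembling lips"], ["tearful eyes"]),
    Feedback(["tearful eyes", "slumped shoulders"], ["tearful eyes", "slumped shoulders"]),
    Feedback(["trembling lips", "slumped shoulders"], []),
]
rates = cue_success_rates(reviews)
print(refine_prompt("portrait of a woman holding a faded photograph", rates))
```

With these made-up reviews, "tearful eyes" succeeds in both of its uses, "slumped shoulders" in one of two, and "trembling lips" in neither, so the refined prompt keeps the first two cues and drops the third. A real workflow would need far more reviews before such rates mean anything.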

For now, creators must often manually tweak AI outputs or blend multiple generations to achieve the desired emotional impact. While AI is rapidly evolving, true emotional depth in synthetic portraits remains a frontier yet to be fully conquered.